Website reindexing is the process by which search engines revisit your website and update their index with any changes made to it. It is an essential part of maintaining your site's visibility in search results, because search engines rely on the index to supply users with relevant, up-to-date content. Each time a new page is added, an existing page is updated, or old content is removed, search engines need to re-crawl and reindex your website to represent it accurately in search results. If your website isn't reindexed regularly, your latest content or updates might not appear, potentially affecting your traffic and overall SEO performance.

Reindexing plays a vital role in search engine optimization (SEO). It ensures that search engines recognize and rank your website based on its latest changes, such as new keywords, fresh content, or technical improvements. Without proper reindexing, search engines may keep working from an outdated version of your website, which can cause a drop in rankings. For example, when a business launches a new product or service, failing to ensure proper reindexing could mean that search engines won't show the new pages to potential customers. Regularly requesting reindexing from Google helps your website remain competitive in search results by reflecting the most accurate and relevant information.

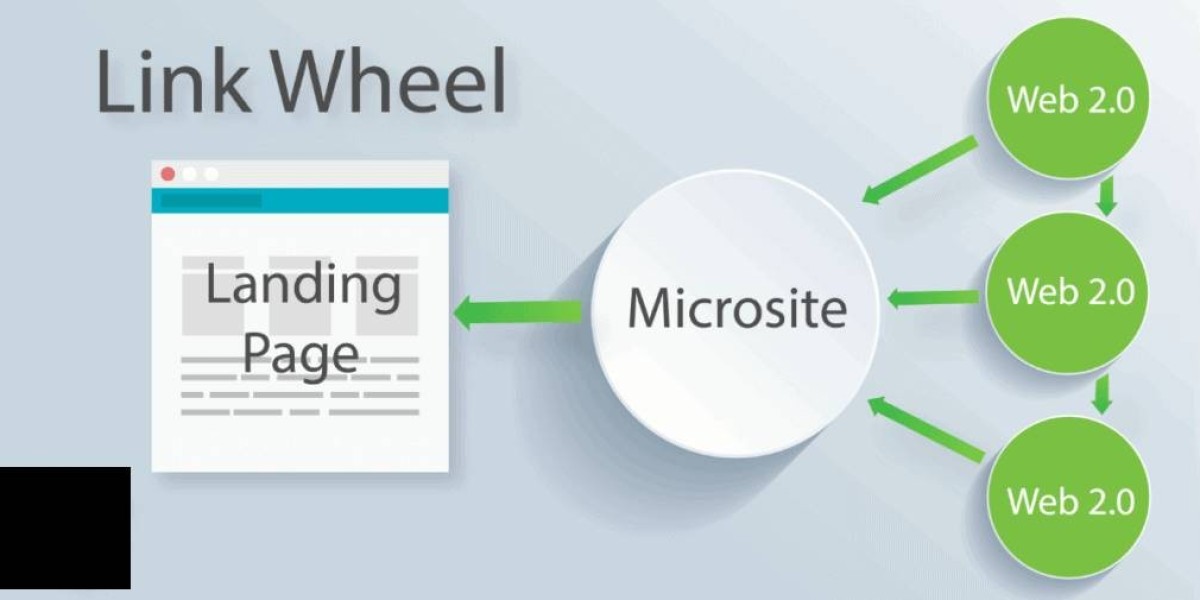

Search engines like Google and Bing use automated bots, known as crawlers, to scan the web and update their index. When you make changes to your site, such as publishing a post or updating your metadata, these bots will eventually find and crawl the changes during their routine scans. However, depending on the size and complexity of your website, as well as your crawl budget (the number of pages a search engine is willing to crawl during a given period), the process may vary in speed. For this reason, certain tools, such as Google Search Console, allow website owners to manually request reindexing for faster updates.
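To make the crawl budget idea concrete, here is a rough back-of-the-envelope sketch in Python. It assumes a fixed number of pages crawled per day and that every page is visited exactly once, which is a simplification: real crawlers prioritize some pages over others and their budgets change over time. The function name `days_to_recrawl` and the numbers used are purely illustrative.

```python
import math


def days_to_recrawl(total_pages: int, pages_per_day: int) -> int:
    """Estimate how many days a full recrawl takes at a fixed daily budget.

    Simplified model: every page is crawled once and the budget never changes.
    """
    if total_pages < 0:
        raise ValueError("total_pages must not be negative")
    if pages_per_day <= 0:
        raise ValueError("pages_per_day must be positive")
    # Round up, because a partially used day still counts as a day.
    return math.ceil(total_pages / pages_per_day)


# A 5,000-page site crawled at 250 pages per day.
print(days_to_recrawl(5000, 250))  # 20
# A small 120-page site fits within a single day's budget.
print(days_to_recrawl(120, 250))   # 1
```

Under this model, a large site with a small budget can wait weeks for a change to be picked up, which is why a manual reindexing request for a few important URLs can be worthwhile.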

Several factors influence how fast a website is reindexed by search engines. The structure of your website, its loading speed, the use of XML sitemaps, and the presence of broken links all play significant roles. Websites with efficient code, minimal errors, and optimized content are likely to be reindexed faster. Additionally, websites with frequent updates and high-quality content tend to attract crawlers more often. If search engines encounter issues such as slow-loading pages or outdated information, they might deprioritize crawling your website, delaying the reindexing process.
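Since XML sitemaps are one of the factors mentioned above, a small example may help show what one contains. The sketch below builds a minimal sitemap with Python's standard library, following the sitemaps.org format (`urlset`, `url`, `loc`, `lastmod`). The helper name `build_sitemap` and the example.com URLs and dates are placeholders, not taken from any real site.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build a minimal XML sitemap.

    pages: iterable of (url, last_modified) pairs, with last_modified
    given as an ISO date string such as "2024-01-15".
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    # Prepend the XML declaration; save the result as UTF-8.
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder pages for illustration only.
example_pages = [
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog/new-post", "2024-02-01"),
]
print(build_sitemap(example_pages))
```

Keeping the `lastmod` values accurate gives crawlers a hint about which pages have changed, though search engines decide for themselves how much weight to give it.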

Tools like Google Search Console are invaluable for managing website reindexing. Through Search Console, you can inspect URLs to check their current indexing status and submit new or updated URLs for reindexing. This is particularly useful after making significant changes, such as redesigning your website or migrating to a new domain. XML sitemap generators and robots.txt files also help guide crawlers to the most important pages of your site. Monitoring tools like Ahrefs or Screaming Frog can further assist in identifying indexing errors or pages that might be overlooked by search engines.
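Before requesting reindexing, it can be worth confirming that your robots.txt file is not blocking the pages you care about. The sketch below uses Python's built-in `urllib.robotparser` to test a few URLs against a sample robots.txt. The rules, the example.com URLs, and the `make_parser` helper are made up for illustration, and Python's parser applies the first matching rule, which may differ from how a particular search engine resolves conflicting rules. The `site_maps()` call assumes Python 3.8 or newer.

```python
from urllib.robotparser import RobotFileParser

# Sample robots.txt content (hypothetical rules for illustration).
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Allow: /

Sitemap: https://example.com/sitemap.xml
"""


def make_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text without making a network request."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = make_parser(ROBOTS_TXT)

# A public blog post is crawlable under these rules.
print(parser.can_fetch("Googlebot", "https://example.com/blog/new-post"))  # True
# Anything under /admin/ is blocked.
print(parser.can_fetch("Googlebot", "https://example.com/admin/login"))    # False
# Sitemap URLs declared in the file.
print(parser.site_maps())
```

To check a live site instead, `RobotFileParser` also offers `set_url()` and `read()` to download the file before calling `can_fetch()`.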